Aim

My aim is to study the movement of the human mouth, and create an interactive product suitable for teaching deaf children the basics of sign language. I hope to research the industry that this would fit into, and analyse any similar existing products, to ensure that there would be demand for mine.

Introduction

There are currently over 45,000 deaf children living in the UK, of whom 20,000 use British Sign Language (BSL). There are thought to be a further 125,000 adults using BSL. Around 90% of deaf children are born to hearing parents, who have little or no experience of dealing with deafness. On average 4 babies are born deaf each day, meaning there is a large global requirement for forms of communication other than auditory ones. Many deaf children initially find it difficult to communicate with family and friends, which can lead to a slower pace of learning than that of their peers. This is unnecessary, as deafness is not a learning disability and there is no reason why a deaf child should develop language skills more slowly than a hearing child. However, deaf children are more susceptible to mental illness in later life as a result of a lack of communication.

Research Aim

My research aim is to create an interactive CD-ROM that teaches the basics of British Sign Language (BSL) to deaf children by means of animation. I feel that this product would improve communication between people who are hard of hearing and those who are not. The product will initially be aimed at children, with the potential to reach a wider market. This could include people who lose their hearing in later life, or family members and friends who want to communicate with the hearing impaired. The product will aim to teach sign language in English, but would leave open the possibility for other languages to follow. The reason for this product is that I feel children should learn to communicate visually from a young age, to aid their learning at such a vital stage of their development.

The product is intended to teach both children and adults, so the information I provide will have to be simple enough for both to understand.

- Study how the human mouth moves.

- Research and decide what would be the most useful words to teach to the children.

- Research and practice lip-syncing technologies for use in my product.

- Research how lip-syncing is used within the industry.

- Analyse pre-existing similar products to include the benefits, and leave out the negatives within my product.

- Research and create a suitable character for presenting the product to children.

Study how the human mouth moves.

This image can be found at:

http://minyos.its.rmit.edu.au/~rpyjp/a_notes/mouth_shapes_01.html

This image can be found at:

http://minyos.its.rmit.edu.au/~rpyjp/a_notes/mouth_shapes_02.html

Obviously this example shows very basic heads, which are not as helpful for adding detail to the faces. For example, the ‘oo’ and ‘o’ phonemes look almost identical in the front view, and it would not be easy to distinguish between them without sound. The side view, however, shows that the lips are very different. Although my animation will be in 3D, I may have to ensure multiple angles of the mouth are visible.

I then discovered a further set of mouth shapes at:

http://www.garycmartin.com/mouth_shapes.html

These mouth shapes are a lot more useful to me as these are more realistic than the cartoon, or sketched examples above. You can see a lot more detail in each of the mouths, showing proper placement of tongue and teeth, as well as the lips. These examples do not show any of the muscular activity surrounding the mouth, such as jaw movement.

In order to get the best reference possible for my research, I decided to use my own mouth as a primary video reference. I felt that this would allow me to analyse the different mouth shapes exactly as I hope to animate.

A, E, I, O, U

C, D, G (video shortened through upload)

F, V

K, N, R, S, Y, Z

L, Th, D

M, B, P

W, Q

I am glad I took these video references, as I have been able to pronounce specific letters, rather than classifying multiple letters under the same phoneme. For example, 'A' and 'I' have very similar mouth shapes, but by looking at the video you can see there is a lot more happening in the face than just the mouth shape. The tongue sets back into the mouth more for 'I', but small details like this go unnoticed with the secondary references I found. However, these differences are surely important for a child to know.

Also, in the video 'L, Th, D', I pronounced both the large and small letters for L and D, to show how different the same letter can be depending on its position in a word. When I make an 'L' sound, my tongue is thinner and goes to the back of the teeth. When making a 'Th' sound, however, the tongue starts extended beyond the teeth and is brought back into the mouth.

I feel that with these references, I now have enough information about how the human mouth moves to make a reasonable prototype for my product.

Research and decide what would be the most useful words to teach to children.

After doing some research into sign language, I have decided that our product should teach fingerspelling, as this provides a basic communication skill amongst sighted people. Fingerspelling is fairly straightforward to learn, and having only 26 actions means that the alphabet can be picked up fairly quickly. Below is an image showing the correct hand positions for a right-handed fingerspeller:

The reason for only teaching the fingerspelling alphabet in our product is that sign language varies dramatically depending on which country, or even city, you are communicating in. Many people ask why we do not simply communicate in fingerspelling all the time, but this would be impractical. Although there would be only 26 gestures to learn, having a conversation would be very time consuming, and it would be far more difficult to portray emotion and tone. Sign language is a far more effective means of communication than fingerspelling, but is much more complicated to learn. The main objective of our product, however, is to help deaf children to communicate as soon as they possibly can. Teaching fingerspelling also allows the children to show more ingenuity: if a child is unsure how to communicate a word, they can simply spell it out using individual letters. We feel this is more helpful than learning specific categories.

The words are split into the grade at which the children should learn the words. There are also an additional 95 nouns listed here, which are not included in The Dolch Word List, or The Dolch 220.

I feel that this collection of words would be ideal for teaching to the deaf children our product is aimed at, as they are among the most commonly found words in the English language and most importantly do not require sound. There are many websites online advertising products to help teach children to read, all using The Dolch List. These websites contain interactive games and learning tools to teach children to read, but are not necessarily aimed at children with partial to no hearing at all.

Research and practice lip-syncing technologies for use in my product.

To present the product in a useful, interesting way, I will be creating a 3D head, to mouth the letters used when fingerspelling. This will accompany fully rigged hands, which will demonstrate the hand gestures used for each letter. I have researched various tutorials online about how to create a head in 3D, and also how to lip sync this character. I will need to find the most effective form of lip-syncing for my product.

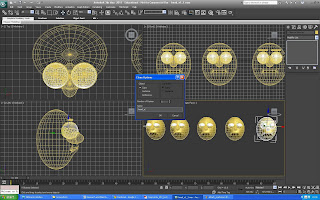

This screenshot shows the 3D head I made for my prototype.

There are numerous ways to animate speech in 3D, one of which is using morph targets. I will be using morph targets for my prototype, as I am already familiar with 3DS Max, and morph targets are a good tool to use within this software. I want to use software which is available to me at my university, as my funds are limited and I wish to make the most of the rendering capabilities available there.

I have researched how to create morph targets to animate the lips moving in a realistic way. By creating morph targets, you can make the computer ‘tween’ the animation between 2 different mouth shapes. With the original mouth closed, you can preset different mouth shapes to represent the letters. There are, however, only 9 different mouth shapes used when pronouncing letters, and each letter is only 1 syllable. This means that any mouth position would have to come from the original mouth shape with lips closed.

This image shows the initial head model being cloned to form the different mouth shapes used in speech. I have restricted myself to making a total of 10 heads, as this is a prototype and I am just testing to see if this would be the best style of lip-syncing for my product.

This image shows the 10 heads lined up ready to have morph targets assigned to them:

The master head is selected, and each of the other heads is selected in turn and assigned to a slider. This slider works on a percentage basis, where 0% is the original head and 100% is the assigned head. Dragging the slider anywhere between 0% and 100% morphs the initial head towards the assigned head by that proportion.
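The slider behaviour described here amounts to linear interpolation between matching vertices of the master head and the assigned head. The following is a minimal Python sketch of that idea, not the way 3DS Max itself implements it; the function name `morph` and the toy vertex lists are hypothetical and simply stand in for real mesh data.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def morph(master: List[Vertex], target: List[Vertex], percent: float) -> List[Vertex]:
    """Blend every master vertex towards the matching target vertex.

    percent = 0 returns the master head, percent = 100 returns the target head.
    """
    if len(master) != len(target):
        raise ValueError("master and target must have the same vertex count")
    # Clamp the slider to 0..100, then normalise it to 0..1.
    t = max(0.0, min(100.0, percent)) / 100.0
    return [
        tuple(m + (g - m) * t for m, g in zip(mv, tv))
        for mv, tv in zip(master, target)
    ]


# Toy data (hypothetical): two lip vertices, closed mouth vs. an open 'A' shape.
closed_mouth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
open_mouth_a = [(0.0, -2.0, 0.0), (1.0, -2.0, 0.5)]

halfway = morph(closed_mouth, open_mouth_a, 50)
```

With the slider at 50, each vertex sits exactly halfway between its closed and open positions, which is the in-between shape the computer fills in between the two heads.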

This final image shows that the morph targets have worked, and the initial head has now morphed to replicate the second head:

I have rendered out some tests, showing the morph targets working. My first test was to make sure I had morph targets working properly, so I created eyelids for the character's head to give the illusion of blinking.

This video was my initial test to see how the head would cope with spelling the vowels, 'A, O, E'.

By looking at the video, you can see that the morph targets are working, and that each of the 3 mouth shapes is clearly different.

I have questioned the relevance of blinking within the prototype, as it is not important at this stage. The mouth and the surrounding area are the most important parts for this product, so I will focus on mouth phonemes from now on.

I then rendered a video test for each of the morph targets, so that I could see how similar the animation was to the video reference of my mouth.

A

E

I

O

U

F, V

M, B, P

L, Th, D

W, Q

K, N, R, S, Y, Z

This video, 'References and Renders: Phonemes', shows each of the phonemes next to the original reference for easy analysis. The video reference is on the left hand side, and I have attempted to sync the animated clip with the audio from the reference as best I could.

By watching this video, I have been able to analyse which of the phonemes work well, and which barely resemble what they are supposed to represent. In particular, the consonants are not very accurate, as there is just 1 mouth shape for all of the letters 'K, N, R, S, Y, Z'. Although a person with average hearing would perhaps not notice, deaf children would certainly notice that the mouth was the same for each letter. This could be confusing for the children, and would probably not help them to develop their lip-reading skills.
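The problem of several letters sharing one mouth shape can be made concrete with a small lookup table. This is only an illustrative Python sketch: the groups are the ones listed for the rendered morph targets above, while the group labels and the function names `shape_for` and `look_identical` are hypothetical. Letters that do not appear in any group are reported as having no target.

```python
from typing import Dict, List, Optional

# Mouth-shape groups as listed for the rendered morph targets above.
# The group labels are hypothetical names chosen for this sketch.
MOUTH_SHAPES: Dict[str, List[str]] = {
    "A": ["A"],
    "E": ["E"],
    "I": ["I"],
    "O": ["O"],
    "U": ["U"],
    "F_V": ["F", "V"],
    "M_B_P": ["M", "B", "P"],
    "L_TH_D": ["L", "TH", "D"],
    "W_Q": ["W", "Q"],
    "K_N_R_S_Y_Z": ["K", "N", "R", "S", "Y", "Z"],
}

# Invert the table so each letter (or 'TH') points at its mouth shape.
SHAPE_FOR: Dict[str, str] = {
    letter: shape for shape, letters in MOUTH_SHAPES.items() for letter in letters
}


def shape_for(letter: str) -> Optional[str]:
    """Return the mouth shape used for a letter, or None if it has no target."""
    return SHAPE_FOR.get(letter.upper())


def look_identical(a: str, b: str) -> bool:
    """True when two different letters are mouthed with the same morph target."""
    shape_a, shape_b = shape_for(a), shape_for(b)
    return a.upper() != b.upper() and shape_a is not None and shape_a == shape_b
```

For example, `look_identical("S", "Z")` is true because both letters fall in the same group, which is exactly the situation a lip-reading child could not resolve from the animation alone.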

Although I feel this method of animation would work well for many other types of keyframe animation, I think it would be a very long and tedious task to make many different morph targets, not only for each letter, but for each sound that can be created using that letter. There is also the matter of getting all of the information across without merging or missing any. Because this product has such a specific aim, hoping to improve people's communication skills, I think the final product should be more detailed and accurate, especially if children will be using the lessons they have learnt from this CD-ROM throughout later life.

In order to find out how the prototype would manage to mouth words, I selected 4 words from The Dolch List for my morph targets to mime.

This video shows a lot more going on with the morph targets: rather than spelling one letter at a time, the whole word is attempted. I think that the words work a lot better than the individual letters, because there are multiple morph targets in motion to get across the entire word. Rather than having multiple letters assigned to one mouth, the head can morph between multiple targets, giving a more independent look to the mouth.

The first instance, 'Milk 1', looks quite strange. The lips make a rippling motion which does not seem accurate. Each of the phonemes used in 'Milk' was keyframed with the morph target slider reaching the full 100%. In order to fit all 4 morph targets into the allotted time, the morphs overlap each other, so several are shown at one time. 'Milk 2' does not have morphs going to 100%; the amount each one reaches was decided according to how prominent the phoneme was. The difference between 'Milk 1' and 'Milk 2' is that the tongue is not visible on the 'L' of 'Milk 2', which shows that not all of the detail gets through if the slider is not pushed to 100%.
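One way to picture the overlap between the two 'Milk' tests is to compute the slider value of every target at a single frame. The Python sketch below assumes each target ramps linearly up to its peak and back down again (a triangular envelope); the frame timings, the reduced peak values and the function name `weights_at` are all hypothetical and are not taken from my scene files.

```python
from typing import Dict, List, Tuple

# (target name, frame where it peaks, peak slider value in percent).
Key = Tuple[str, float, float]


def weights_at(frame: float, keys: List[Key], half_width: float) -> Dict[str, float]:
    """Slider value of every target at one frame.

    Each target ramps linearly from 0 up to its peak and back to 0 over
    `half_width` frames either side of its peak frame.
    """
    result = {}
    for name, peak_frame, peak in keys:
        distance = abs(frame - peak_frame)
        falloff = max(0.0, 1.0 - distance / half_width)
        result[name] = peak * falloff
    return result


# Hypothetical timings: four targets peaking five frames apart.
milk_1 = [("M", 0, 100), ("I", 5, 100), ("L", 10, 100), ("K", 15, 100)]
# Hypothetical reduced peaks standing in for how prominent each phoneme is.
milk_2 = [("M", 0, 80), ("I", 5, 60), ("L", 10, 50), ("K", 15, 70)]

frame_7_full = weights_at(7, milk_1, half_width=8)
frame_7_soft = weights_at(7, milk_2, half_width=8)
```

Under these assumed timings, three targets are partly active at frame 7 in the 'Milk 1' setup, and in the 'Milk 2' setup the 'L' target never exceeds its reduced peak, which is consistent with the tongue detail not coming through.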

The word 'But' does not start with the correct mouth shape. Although it is the letter 'B', the sound is that of a lower case 'b'. The top lip protrudes slightly, and then the lips roll inside the mouth, facing the opposite way to how they initially started. Such minute details would be extremely difficult to capture through keyframing, and it would be impossible to make a morph target for every possible mouth shape.

I feel the word 'Cat' works rather well, as the mouth is only required to open and close, but the tongue and teeth placement is necessary to distinguish proper articulation.

'Because' has a similar problem to 'But', with the 'b' sound at the beginning leading into the lip roll. The width of the mouth appears to be fine for the next phoneme, but the emphasis on the 'u' is not clear. This may be because the renders have been filmed from one camera angle. I hope to include either the option to pan around the character in the interactive CD-ROM, or multiple views to show front and side articulation.

After analysing these videos and renders, I have decided that Motion Capture would be the best choice for the final version of this product. Motion Capture is far superior for capturing detail, as it is taken from a realistic human reference. Provided the actor had proper pronunciation and articulation, Motion Capture would record the data digitally, and this information would then be assigned to the head I will create.

Motion Capture is the term used to describe digitally recording realistic motion and transferring this data onto a digital model. It allows animators to capture life-like accuracy without the need for keyframing. Cameras are set up to capture the actor being filmed, and transfer this information to a virtual 3D space. Markers on the actor are recognised by the computer, so we can assign markers to specific areas of the body. When a motion is carried out, the computer simulates how the markers move on screen. In my opinion, this is a very useful, time-effective animation technique, but not all animators feel this way. Some would even argue that Motion Capture is not animation at all, merely a technique impersonating animation. Michael Sporn, a great animator, wrote this in his blog:

'The “animators” have become interchangeable and almost irrelevant.

You aren’t able to define anyone’s animation style behind any of Tom Hanks’ characters in Polar Express. You can only see Tom Hanks or Savion Glover in Happy Feet.'

http://www.michaelspornanimation.com/splog/?p=935

I have found links showing where each of the markers needed should go on the face, and how to properly name them:

http://softimage.wiki.softimage.com/index.php/Motion_Capture_in_Face_Robot#Motion_Capture_Marker_Placement

http://softimage.wiki.softimage.com/index.php/Motion_Capture_Marker_Naming

There is also a step by step guide on how to capture face motion data here:

http://softimage.wiki.softimage.com/xsidocs/face_act_TheMotionCaptureProcess.htm

Here is a link to a video showing Face Motion Capture, where the face has a marker at each area of motion. I have posted this link to show the difference in detail between my keyframe animation and this motion capture animation.

http://www.youtube.com/watch?v=QGEFmv0jXGU

I have been looking into facial rigging and the use of motion capture for animating the face for my product. The link below is to a blog by Chris Evans, where he explains how to use Stabilization (STAB) markers on the temples and nose bridge to keep the head still when recording data from the face.

http://www.chrisevans3d.com/pub_blog/?cat=12
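To illustrate what stabilisation markers are for, the Python sketch below expresses every face marker relative to the centre of the STAB markers, so that movement of the whole head drops out of the data. It is a simplification under stated assumptions and not the method from the blog: it removes head translation only (a full solution would also have to remove head rotation), and the marker names, positions and the function name `stabilise` are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Frame = Dict[str, Point]  # marker name -> position in one captured frame


def centroid(points: List[Point]) -> Point:
    """Average position of a list of 3D points."""
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def stabilise(frame: Frame, stab_names: List[str]) -> Frame:
    """Express every marker relative to the centre of the STAB markers.

    This removes head translation only; head rotation is NOT corrected here.
    """
    origin = centroid([frame[name] for name in stab_names])
    return {
        name: (p[0] - origin[0], p[1] - origin[1], p[2] - origin[2])
        for name, p in frame.items()
    }


# Hypothetical marker names and positions for two frames of the same pose,
# where the whole head has drifted 3 units along x between them.
STAB = ["temple_left", "temple_right", "nose_bridge"]
frame_a = {"temple_left": (-4.0, 2.0, 0.0), "temple_right": (4.0, 2.0, 0.0),
           "nose_bridge": (0.0, 2.0, 3.0), "lip_upper": (0.0, -3.0, 2.0)}
frame_b = {name: (x + 3.0, y, z) for name, (x, y, z) in frame_a.items()}
```

After stabilising, the lip marker has the same coordinates in both frames even though the head moved, so any change that remains in the data comes from the face itself.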

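This image shows the actor with his STAB markers on. This process would be very helpful when using motion capture in my next module, the implementation of this product. The product will be far easier to understand if the head remains still whilst the face moves. Paying particular attention to the mouth, jaw, cheeks and tongue will enable the children to see which part of the mouth should move for a certain letter or word.

This image shows a computer generated head, with markers shown digitally on the face: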

This is similar to the outcome I hope for, but with a slightly more cartoon-like character to appeal to younger children. It will have the correct number of markers to capture realistic human expressions and phonemes.

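I have found a forum where members are discussing which form of animation is better, keyframe animation or motion capture:

http://forums.cgsociety.org/archive/index.php/t-2119.html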

I have decided to weigh up the pros and cons of keyframe animation vs. Motion Capture:

Keyframe Animation

Positives

- Requires skill. An animator must have ability and patience to make keyframing look attractive.

- A particular frame can be copied if required saving time, alterations can be made to make each frame look unique.

- An animation can be easily altered if something is not quite right. Frames can be dragged to the appropriate position.

Negatives

- Very tedious work. Lots of time spent fine-tuning each keyframe.

- Can be difficult to animate speed accurately.

- Fewer companies still practicing keyframe animation within industry.

Motion Capture

Positives

- Very good for replicating human behaviour/motion.

- When used in commercial production - very high quality with great attention to detail.

- Great for capturing real life speed

- Useful for showing off modelling/texturing techniques if keyframing is found difficult.

- Motions can be layered enabling keyframe-like qualities.

- Most CG TV-shows have switched to Motion Capture - large majority of the industry.

Negatives

- Usually looks like human motion, even if it's not supposed to.

- Less focus on the animator's vision - a character will often be associated with its live actor.

As you can see from this list, I have found more positives for Motion Capture, and also fewer negatives. Motion Capture appears to be the best technique for animating my character's face.

Research how lip-syncing is used within the industry.

There are many different uses for lip-syncing within the film, games, music and other multimedia industries. Lip-syncing is the term for matching audio clips with lip movement within an animation and can refer to a number of different techniques.

Lip-syncing allows virtually created characters to seem real. Over time, virtual characters have become more and more popular, and these days we see them being used as online teachers, tour guides, and in any other digital role where a human figure had to be used before. The film 'The Polar Express' makes heavy use of motion capture data of Tom Hanks. 'The Polar Express' is the first film to successfully utilize facial motion capture for the entire duration of a computer generated movie. 'Avatar' also uses motion capture (MOCAP).

It is noticeable from the screenshot how accurate the motion capture data is in relation to the reference used to create it. Motion capture is being used more and more in modern times, as actors can be recognised without even being on set.

In many cases, such as within film, lip-syncing is required to convert the footage into multiple languages so that the products can be marketed globally.

The 'International Dubbing Group' offers dubbing and post-production facilities that aim to provide foreign-language dubbing and subtitling services to those working in live action, interactive products and animation.

http://www.idgus.com/

This company does not share very much information about how they work and would rather customers contact them individually so that they can work on a project specifically for them.

There are also many areas within the games industry that involve lip-syncing. The company which claims to be at the top is 'Annosoft'.

http://www.annosoft.com/

According to the homepage:

'Annosoft is the leading provider of automatic lipsync technologies for game and multimedia development. Our customers include some of the biggest names in the game industry.'

'Annosoft' has worked on some big game titles such as Red Faction, Fable 2 and Rage. For these games to have become as successful as they did, the lip-syncing presumably had to be good enough to keep players engaged.

Lip-syncing is not only done by large companies, however. Across the world there is an abundance of freelance animators with similar skills. There are many different lip-syncing software packages available to buy, but these are aimed more at animation studios with large budgets, as most independent animators cannot afford them. These high prices seem unfair at first, but when you realise how much money can be made by incorporating lip-syncing into a product, it seems only right that the software developers should see a share of this wealth.

This website gives a list of software available to purchase for automated lip-syncing:

http://www.3dlinks.com/links.cfm?categoryid=1&subcategoryid=71&CFID=50748423&CFTOKEN=12312558

Due to this increase in demand, animators must use lip-syncing technologies to make their characters seem the most realistic on the market. This has meant that there is a vast range of lip-syncing software available for purchase. One company I have found whose products look useful is 'Visage Technologies'.

'Visage Technologies' offers products which help to animate virtual characters.

The 'About us' page on the website states:

'Visage Technologies AB offers innovative technology and services for applications involving computer generated virtual characters and computer vision for finding and tracking faces and facial features in images and video.

The company has strong roots in the research community. The founders were among the main contributors to the MPEG-4 Face and Body Animation International Standard. Visage Technologies fosters these relationships by promoting research collaboration and offering free or discounted software licenses to academic institutions. Together with a strong R&D effort, this emphasises our commitment to stay at the cutting edge of innovative technology. ' - Visage Technologies

visage|SDK™ VISION package is the new software from 'Visage Technologies'. The software includes:

- Facial Feature Detection - allowing you to find the location of a face in a still image.

- Facial Feature Tracking - finding and tracking the face and its parts - mouth contour, eyes and nose in video sequences.

- Head Tracking - tracking the head in video sequences, returning the position and rotation of the head.

- Eye Location Tracking - finding and tracking eyes within a video sequence.

- Eye Tracking - measuring the point of gaze, or the motion of an eye relative to the head.

This information can be found at:

Analyse pre-existing similar products to include the benefits, and leave out the negatives within my product

After searching online for sign language and lip-reading software, I have found limited results. One website I came across, ‘Seeing Speech’, contains videos by a trained hearing health professional which help the viewer to develop lip-reading skills, through a CD-ROM containing picture books, films and videotapes.

The link above shows the website. These clips use real people to present the information; however, they seem very boring, and would probably not appeal to children. This product appears to be directed towards more mature viewers.

This next example is an iPhone application called 'iSign', which teaches sign language through a 3D animation with text on screen.

This product appears to be useful, but the character used looks very realistic. I would question why this is, as a video of a real person would show more detail than a 3D imitation. My other reservation about this product is that not everybody can afford an iPhone, and those who cannot are unable to use it. The product again appears to be aimed at a more mature audience than mine.

There are several comments from customers on the website:

Difficult to understand

Many of these signs are nearly impossible to understand. And some of them are not ASL but signed English.

Good App But Needs More Words

I purchased this app over a year ago while taking an ASL class. I found it helpful mostly because I could practice with the quiz feature. I still use it from time to time to recall words. It's a good app.

I was hopeful that more words would be added by now though.

Good App

Handy way to keep up with signing anywhere. I can't carry a large book around and I like the quizzes and categories. Cool App.

There is also another product similar to this called, 'iSign - Lite':

http://itunes.apple.com/us/app/isign-lite/id292486900?mt=8

There is another comment about this product:

Good!

This app was very handy, I don't understand why all u people hate it? It a LITE version!!!!! So of course there's not going to be a lot of words!!!! I think it is a good starting point for begginers:)

Judging by these comments, the product did not receive especially good reviews, although some people claim that it is good for beginners. I am unsure, however, whether 'beginners' means children or adults learning to sign, as one would learn much faster than the other. The comment stating that the product is difficult to understand concerns me the most, as clarity is our main goal. If the children using our product cannot understand the signs, then the product would be pointless.

After seeing these existing examples, I feel that there would still be demand for our product. The examples I have seen are not aimed at children, but they have helped me to see how I could make my product better. From the customer reviews I can see that, above everything, clarity is the most important aspect of the product. The viewer must be able to understand the information the character is trying to get across.

Research and create a suitable character for presenting the product for children.

For teaching fingerspelling to children, the character used must be both suitable and entertaining, to keep the children captivated. There are many factors to take into consideration when working with children, such as religious and moral issues. The character should have fun qualities that will inspire the children to want to watch and learn. I have looked at pre-existing characters used for presenting information to children, and they all seem to be very simple and brightly coloured, and come across as friendly and kind to the child. If the child believes that the character is their friend, then they will be more inclined to want to learn. According to Lev Vygotsky, play is pivotal for children's development, since they make meaning of their environment through play. This tempts me to give the character I create a playful style. A good example of this is ‘Sesame Street’, which incorporates many activities within the show to keep the children entertained throughout.

Below are some pre-existing characters, which have been very successful in children’s entertainment:

http://coloringpagesforkids.info/dora-coloring-pages/

http://ben-10-alien-force.otavo.tv/

http://adweek.blogs.com/adfreak/2008/11/how-kids-really-like-their-cartoon-characters.html

The blog links to 3 separate articles:

Part 1

http://www.kidscreen.com/articles/news/20081027/gender.html?word=gender&word=forensics

Part 2

http://www.kidscreen.com/articles/news/20081029/genderii.html?word=gender&word=forensics

Part 3

http://www.kidscreen.com/articles/news/20081031/kids.html

The links above show a study carried out by Dr. Maya Gotz of the International Central Institute for Youth and Educational Television in Germany. The study covers 19,664 children's shows from 24 different countries, and claims that only 32% of protagonists in children's shows are female, compared to 68% male. When the survey looks at non-human characters only, the imbalance grows to 13% female and 87% male. The reasoning offered for these figures is that girls find it much easier to relate to a male character on screen than boys do to a female character, as boys do not find a female role appealing until later in life.

The study then goes on to list several gender stereotypes it discovered. Women are usually:

- Beautiful, underweight and sexualized

- Motivated by romance

- Shown as dependent on boys

- Stereotyped depending on hair colour

Men, on the other hand are:

- Usually loners or leaders

- More frequently the antagonist

- Often overweight

- More often than not, Caucasian

- Stereotyped as one of four: the lonesome cowboy, dumb blockhead, clever small guy or the emotional soft boy.

Although these points may not seem fair, I have definitely noticed similar generalisations within children's cartoon characters. I will therefore take these points into consideration when designing my own characters.

The second section of the study talks about how girls are more likely to admire a character with life experiences similar to their own, and also admire characters who fail in the beginning but manage to win in the end. Gotz states that girls like to laugh, even if the joke reflects their own imperfections, as this can help them to work through their own problems. They appear to like characters who develop throughout the show and become more mature, similar to how girls are so quick to grow up. They appreciate the complexities brought on by characters facing relationship issues, and how they can go about resolving these. With regards to body image, girls prefer kid characters to look like kids, rather than caricatured women. 1,055 girls were shown 3 images of a well-known German female character who is a child: one with a skinnier frame, one with a normal-looking body type, and one with a large build. They were asked which image they preferred, and 70% chose the middle, average body type. The same survey was done on boys, and similar results were found. This shows that both genders would rather have a more natural, less sexualized female character.

In Part 3, Gotz goes on to say that boys prefer characters who have goals they must achieve. She says that this might be the reason why boys are attracted to action/adventure content, in which the protagonist uses aggression to overcome their problems and violence serves as a more forceful way of resolving conflict. Boys also like the quick-witted character who quickly comes up with ideas and can get themselves out of a bad situation. Examples given are Bart Simpson and Spongebob Squarepants. Gotz then asked 1,000 boys to draw their least favourite type of character. Bullies, weaklings, dummies and bad boys were amongst the most frequently drawn.

After looking at the existing examples and the study by Gotz, I have decided to draw my own character designs for use in the final product. I made a very simple character head for my initial prototypes, but will be modelling a head using ZBrush in my next semester at university. I am learning how to use this software in one of my current modules, and should be trained before I start this next project.

I initially started by drawing multiple quick sketches on a sheet of A4 paper to see if I came up with any ideas for characters. I have decided that I will create a male character, to fit with how cartoons are at present.

I then decided to begin working on actual characters which could be modelled for use in the final product. I started by drawing a yeti and a goose character, as I felt that animals could be a good way of presenting information to the children. However, the problem with the goose is that his mouth does not resemble that of a human, and would probably not be the most useful character to have.

I then decided to design some human characters, as these would be the best reference for the children.

I feel that these characters would not be that appealing to children. I think that the faces are not fun enough to attract the children's attention. Except for the one on the right, the expressions seem quite angry, and this may scare young children - especially if the character is in 3D and the animation is not smooth. I then considered what makes a character popular, and decided that cowboys are very popular amongst young boys. I decided to draw a design for a cowboy:

This cowboy would probably be appealing to boys, but it's doubtful that young girls would want to learn sign language from a cowboy. I would expect them to be happier with a princess-type character. It would not be sensible to have a character aimed at one gender, because if the product is to be used in schools and other mixed-gender establishments, both genders should be considered.

This is why I decided to draw 2 final designs for a young child character. The left design is in my own style of drawing, and I tried to make the right one seem more like an Anime character, as these are very popular amongst children's cartoons at present.

I have decided that I will use the character design on the left to progress with my project, and look forward to modelling and rigging a 3D character head to his specifications. The character is of a similar age to the children I hope for my product to attract, and he seems playful and cheeky.

Conclusion

I feel that the research I have carried out has provided me with enough information to create an attractive, interactive CD-ROM, with a character suitable for capturing children's attention, in the hope that they will improve their communication skills.

With regards to my prototype, I feel that, as the model was created with the intention of teaching children to lip-read, the mouth should be the most important part. Each phonetic shape should be easily distinguishable, to show the child the slight differences between specific letters and sounds. The teeth and tongue are barely visible in these animation tests, which I feel should be improved upon for the final product. The tongue is very important to humans, as its muscular articulation is what enables us to speak. Phoneticians study the sounds of speech, and they categorize universal vowel sounds by the position of the tongue in the mouth. The traditional phonetics system uses the high vowel, such as the ‘i’ in ‘sandwich’, to describe how the tongue arches towards the roof of the mouth, and the low vowel, such as the ‘a’ in ‘dark’, to describe a flatter, low position. The tongue I created for my prototype does not have enough detail to show these precise positions.

As this is the case, I feel that using Motion Capture would be the best direction to take when implementing my final product. This would provide my character with great detail, and with motion that closely replicates human movement. This would be far more useful to a child with impaired hearing, as they would have the most accurate reference possible. I have experience of using Motion Capture at university, and I got some exciting results. Although I have not practised using facial markers, I am capable of setting up the scene, capturing the data and then assigning it to the fully rigged character.

I think that, although extremely useful for my personal understanding of how the human mouth works, my video references were not as specific as I would have hoped. For the next part of my project, I will seek the assistance of somebody with acting experience, who can articulate their words better than I can. Although the sounds in my recordings are clear, the mouth does not look as natural as it does in regular conversation. This would not be fair to the deaf children, as they would be learning from something slower than real-life speech. It would also be a good idea to include a speed option within our interactive CD-ROM, so that the children can learn at their own pace.
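
As a rough illustration of how such a speed option might work, the sketch below rescales the timing of an animation clip by a speed factor. It is only a minimal sketch in Python under my own assumptions: the `Keyframe` type, the `retime` function and the mouth shape labels are hypothetical names made up for this example, and are not taken from 3ds Max or any other package used in this project.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Keyframe:
    time: float       # seconds from the start of the clip at normal speed
    mouth_shape: str  # hypothetical label for the mouth shape shown at this time


def retime(keyframes: List[Keyframe], speed: float) -> List[Keyframe]:
    """Return a copy of the clip played at `speed` times normal speed.

    A speed of 0.5 plays at half speed, so every keyframe time doubles.
    """
    if speed <= 0:
        raise ValueError("speed must be greater than zero")
    return [Keyframe(k.time / speed, k.mouth_shape) for k in keyframes]


# Hypothetical clip: the mouth opens into two shapes and returns to rest.
clip = [
    Keyframe(0.0, "rest"),
    Keyframe(0.25, "A"),
    Keyframe(0.5, "O"),
    Keyframe(1.0, "rest"),
]

half_speed = retime(clip, 0.5)
for frame in half_speed:
    print(frame.time, frame.mouth_shape)
```

Keeping the original clip unchanged and producing a retimed copy means the child could switch between speeds freely without the timing drifting after repeated changes.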

Bibliography

National Deaf Children’s Society (NDCS)

http://www.ndcs.org.uk/

British Sign Language

http://www.british-sign.co.uk/what_sign.php

Wikipedia Insert – British Sign Language

http://en.wikipedia.org/wiki/British_Sign_Language

Sign Language: the study of deaf people and their language

http://books.google.com/books?id=Gcy4MhmLhdkC&printsec=frontcover&dq=Sign+Language:+the+study+of+deaf+people+and+their+language&hl=en&ei=Si-uTL2gB5SL4gbT5430Bg&sa=X&oi=book_result&ct=book-preview-link&resnum=1&ved=0CDsQuwUwAA#v=onepage&q&f=false

Deaf People and Linguistic Research

http://www.britishscienceassociation.org/web/news/reportsandpublications/magazine/magazinearchive/SPAArchive/SPAJune06/Feature6June06.htm

British Deaf Association

http://www.bda.org.uk/

How to teach children BSL in a fun way?

http://uk.answers.yahoo.com/question/index?qid=20090107095605AATjjVB

Teaching Sign Language to parents of deaf children

http://www.eenet.org.uk/resources/docs/collins.php

Lip syncing in 3DS Max

http://www.tutorialhero.com/click-10961-basic_lip_syncing.php

Creating Morph Animations

http://update.multiverse.net/wiki/index.php/Creating_Morph_Animations_with_3ds_Max

How phonetics are spelt

http://esl.about.com/library/weekly/aa040998.htm

The Tongue and Speech

http://health.howstuffworks.com/human-body/systems/nose-throat/tongue3.htm

Lev Vygotsky

http://en.wikipedia.org/wiki/Vygotsky

Morph Target Animation (Wikipedia)

http://en.wikipedia.org/wiki/Morph_target_animation

Audio to Video Synchronisation (Wikipedia)

http://en.wikipedia.org/wiki/Audio_to_video_synchronization

Symmetrical Morph Targets Tutorial

http://www.tutorialized.com/view/tutorial/Symmetrical-Morph-Targets-3dsmax/24531

Face Control and Reaction Master

http://www.3dtotal.com/team/Tutorials_3/video_facecontrol/facecontrol.php

Animation Lip-sync

http://minyos.its.rmit.edu.au/aim/a_notes/anim_lipsync.html

iSign iPhone Application

http://www.iphonefootprint.com/2008/10/iphone-sign-language-app-for-visual-communication/

Seeing Speech

http://www.seeingspeech.com

Preston Blair Mouth Shapes

http://minyos.its.rmit.edu.au/~rpyjp/a_notes/mouth_shapes_01.html
